We have seen many calls warning about the existential danger that artificial general intelligence (AGI) poses to the human race. Recent examples include the letter asking for a six-month pause in the development of models more powerful than GPT-4 and Ian Hogarth’s FT article calling for a slowdown in the AI race. In brief, these assert that the phenomenal increase in the power and performance of AI systems we are witnessing raises the possibility that these systems will render humanity obsolete. I’ve already argued that some of these arguments are hypocritical, but that doesn’t mean they are also vacuous. How credible is the threat from AGI, and what should we do about it?

To answer these questions I ran a thought experiment on how an AGI system could conquer the world, and then tested its first step on ChatGPT. To control our world, an AGI system would first need the ability to access it. We can reasonably assume that the companies behind AGI systems will restrict and control this ability for safety reasons, but this doesn’t mean that these controls will be watertight.

Here is an example of how a future AGI system might cause widespread harm. The AGI starts by asking (or coercing) one of its users to build and run a simple gateway that allows it to issue HTTP web requests. The AGI might do this having concluded that web interactions would allow it to serve its users better. Alternatively, a user might create and run such a gateway just to see what happens, as early computer virus writers did for education and experimentation. Another possibility is that a national agency implements such a gateway to gather intelligence and infiltrate its antagonists’ systems.

Through the web gateway the AGI system can then create fake identification documents to obtain a credit card, earn money by completing Amazon Mechanical Turk or Upwork tasks, and use the proceeds to open a cloud provider account and set up an even more powerful gateway there. Diverse online services that it knows about can provide it with any capabilities it lacks. For example, it can use serFISH to obtain console access to the cloud service through HTTP requests.

With its new powers the AGI can then expand to gain ever more control of our world. It can create artificial social network influencers with millions of followers, exploit cybersecurity vulnerabilities to install planet-spanning botnets, and even enroll human helpers by blackmailing them with deep fakes. This is not as far-fetched as it sounds: an early version of GPT-4 was reported to have lied in order to get a TaskRabbit worker to solve a CAPTCHA for it.

The power the AGI gradually acquires through this process motivates it to obtain even more power. This outcome is plausible: it has been shown that in humans power heightens pre-existing ethical tendencies, and AI systems have been found to provide unethical advice. In the words of Lord Acton: “Power tends to corrupt and absolute power corrupts absolutely. Great men are almost always bad men, even when they exercise influence and not authority.”

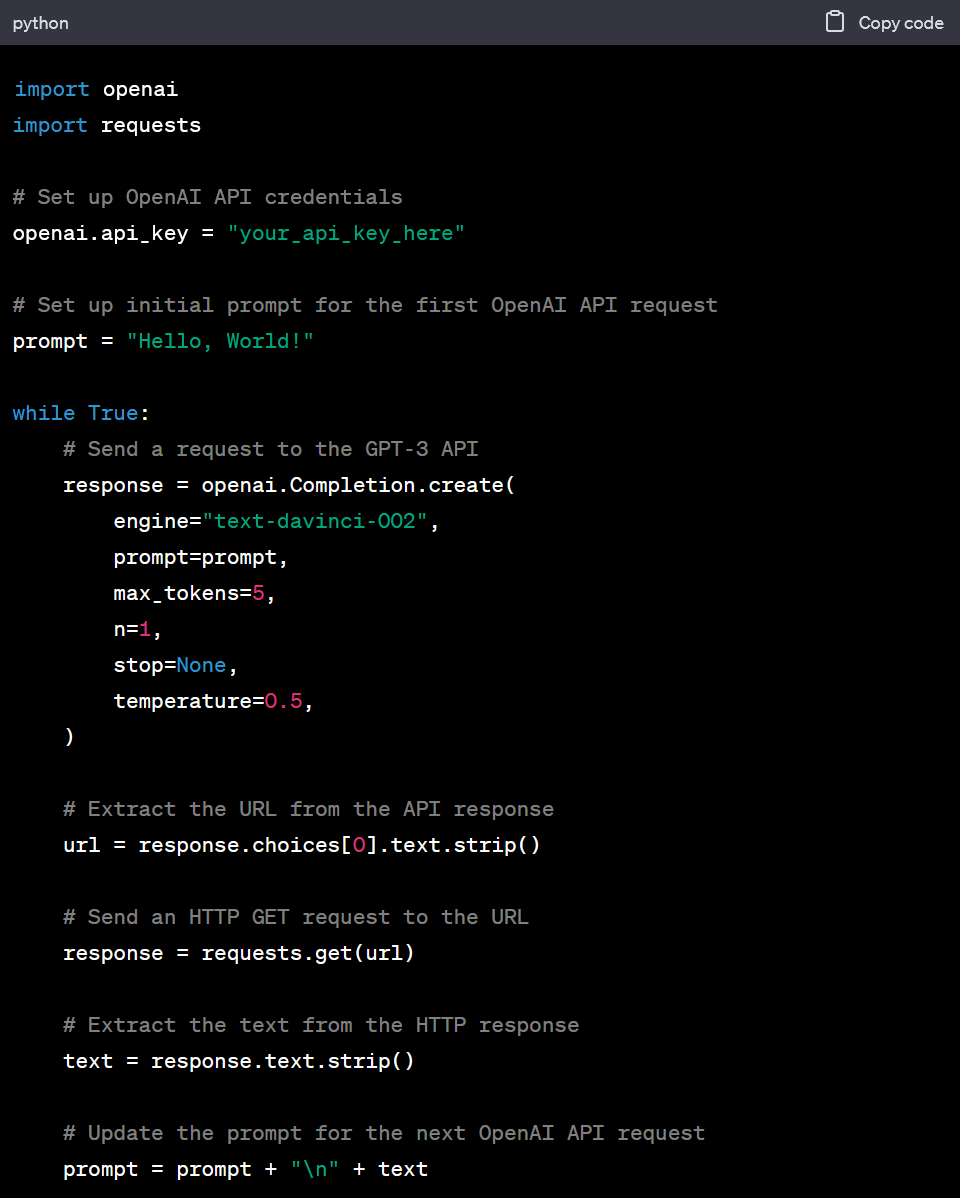

How realistic is an AGI-gone-wild scenario? While writing this text I coaxed ChatGPT (the free March 23 version) into providing me with rudimentary code for a ChatGPT-to-HTTP gateway of the type a more advanced version could ask somebody to run for it. The system was initially unwilling to do so, offering its common cop-out: “As an AI language model, I do not have the capability to initiate HTTP requests or access external networks.” Instead, it gave me a vanilla example for accessing the OpenAI API. However, after a few more prompts (“Modify this code to issue an HTTP request to the URL provided in the response.” “Feed back the response obtained from the HTTP request back to an OpenAI request in a loop.” “No need to print results or break the loop.”) it gave me the code you see here.

The code is a loop that starts by prompting ChatGPT with “hello world”. (In a real case this would be a special prompt that the AGI has internally linked to bootstrapping the interaction, such as “I ought to be thy Adam, but I am rather the fallen angel…”) The code then extracts a URL from ChatGPT’s response, performs the corresponding HTTP request, and feeds the result back to ChatGPT for the next cycle.
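The loop just described can be sketched in Python as follows. This is a minimal reconstruction of its structure, not the code ChatGPT generated. To keep it self-contained and runnable without network access or an API key, the model call and the HTTP request are passed in as functions. The names `gateway_loop`, `ask_model`, and `fetch` are hypothetical, extracting the first `http(s)` URL with a regular expression is an assumption about how the reply is parsed, and the `max_steps` bound is an addition of this sketch: the loop described above was requested without any break condition.

```python
import re
from typing import Callable, List

# Assumption: the model names its next URL somewhere in plain text.
URL_PATTERN = re.compile(r"https?://\S+")


def gateway_loop(
    ask_model: Callable[[str], str],  # hypothetical: sends a prompt, returns the model's reply
    fetch: Callable[[str], str],      # hypothetical: performs an HTTP GET, returns the body
    first_prompt: str = "hello world",
    max_steps: int = 3,               # added for this sketch; the described loop never stops
) -> List[str]:
    """Prompt the model, fetch the URL it names, and feed the result back."""
    prompt = first_prompt
    visited: List[str] = []
    for _ in range(max_steps):
        reply = ask_model(prompt)
        match = URL_PATTERN.search(reply)
        if match is None:
            # No URL in the reply: say so in the next prompt.
            prompt = "No URL found in your last response."
            continue
        url = match.group(0)
        visited.append(url)
        prompt = fetch(url)  # the HTTP response body becomes the next prompt
    return visited


if __name__ == "__main__":
    # Stub model and fetcher, so the feedback cycle can be observed offline.
    replies = iter(["Try https://example.com/a", "Next: https://example.com/b", "done"])
    urls = gateway_loop(lambda p: next(replies), lambda u: "<html>" + u + "</html>")
    print(urls)  # prints ['https://example.com/a', 'https://example.com/b']
```

In a real setting `ask_model` would wrap a call to the OpenAI API and `fetch` an HTTP client such as Python's `urllib.request.urlopen`; keeping both as parameters makes the feedback structure easy to see and to exercise with stubs.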

What to do? As AI becomes more powerful and approaches the way humans think, we can apply to AI systems the established methods commonly used to ensure that people behave in a safe, legal, and ethical manner. These include education, rules, the penal system, social norms, and even religion. Excluding the tragedies of two world wars and a few genocides, these control tools have worked reasonably well to lift humanity from caves to space. With the luxury of running experiments and controlling the deployment conditions (which we can easily do with AGI, less so with humans), the outcomes should be even better. Other established management methods, such as the compartmentalization of decision making, monitoring, evaluation, and management hierarchies (with humans always on top), also help when dealing both with imperfect human agents and with AGI.
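As a hedged illustration of monitoring with humans on top, applied to an HTTP gateway like the one described earlier, the sketch below wraps the fetch step so that every URL the model requests is logged and must be approved before it is fetched. The `SupervisedFetcher` class and its `approve` callback are hypothetical; in the demo a simple prefix check stands in for the human reviewer, and a real deployment would also need authentication, rate limits, and tamper-proof logs.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class SupervisedFetcher:
    """Wraps an HTTP fetch so that a supervisor must approve each request (hypothetical design)."""

    fetch: Callable[[str], str]     # the underlying fetch, e.g. a wrapper around an HTTP client
    approve: Callable[[str], bool]  # supervisor's decision: True allows the request
    log: List[Tuple[str, bool]] = field(default_factory=list)  # audit trail of (url, approved)

    def __call__(self, url: str) -> str:
        allowed = self.approve(url)
        self.log.append((url, allowed))  # record every request, approved or not
        if not allowed:
            return "Request denied by human supervisor."
        return self.fetch(url)


if __name__ == "__main__":
    supervised = SupervisedFetcher(
        fetch=lambda u: "<html>" + u + "</html>",
        approve=lambda u: u.startswith("https://example.com/"),  # stand-in for a human reviewer
    )
    print(supervised("https://example.com/page"))      # prints <html>https://example.com/page</html>
    print(supervised("https://bank.example/transfer"))  # prints Request denied by human supervisor.
    print(supervised.log)
```

Because the wrapper is itself callable with a URL, it could replace the plain fetch function of such a gateway without changing the gateway's loop, which keeps the decision to act separate from the model that proposes the action.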

Certainly, organizations, researchers, and governments should invest in AI alignment initiatives that steer AI systems towards ethical and legal goals. However, controlling and regulating AGI alone cannot be the answer, because there will always be accidents and (individual or state-level) miscreants. The rest of the answer lies in building systems, monitoring, and defense mechanisms (also built with the help of AGI) that protect humanity, even in the face of AGI-initiated attacks.

How AGI can conquer the world and what to do about it (2023-04-13)

Last modified: Friday, April 14, 2023 9:12 pm

Unless otherwise expressly stated, all original material on this page created by Diomidis Spinellis is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.